
CDT and Berkeley Research Reveals Important Insight into Fairness in Online Personalization

Personalization is the gold standard of modern business models. These days, every service aims to provide an individually tailored experience that anticipates the preferences and needs of customers (and potential customers), hoping to establish loyalty in a competitive environment for consumer attention. Advertisements, search results, and even prices are among the content altered to maximize relevance for each user and profit for the company. We might resent the judgments being made about us when we see the tenth advertisement for egg-freezing services in one day, or rejoice when our vague Google search somehow gets exactly the results we needed, but does it matter to us how exactly these decisions are made? Recent research suggests that it does.

CDT partnered with a team from the UC Berkeley School of Information to analyze how individuals feel about personalization. Funded by a grant from the Berkeley Center for Technology, Society, and Policy and the Berkeley Center for Long-Term Cybersecurity, we looked at multiple contexts for personalization (search, pricing, and advertising) as well as different data types that can be used, such as race, gender, location, and income. The research paper covers a number of findings; in this shorter description, we’ll discuss our results on the use of gender.

Research Design

It’s not obvious what pieces of a website have been tailored to you. And even when you think something is targeted at you, it is not often clear just how “personal” the personalization is. Content can be tailored based on non-controversial pieces of information, like the city associated with your IP address — or it might be targeted to you personally based on extensive catalogues of data about you and “people like you.” The technical processes powering online personalization are obscure and complicated, adding another layer of confusion. Rather than trying to demystify the process, this research design cuts to the core questions around fairness in personalization.

The research team created a survey instrument that asked people to rate different personalization scenarios for fairness on a 1-5 scale (they also collected ratings of trustworthiness, comfort, and acceptability, as well as baseline demographic and other statistical data). They received 748 responses and have prepared a detailed analysis that will be presented at this year’s Privacy Law Scholars Conference. CDT’s Privacy & Data team worked with the researchers to develop the instrument and interpret the results. The resulting paper, A User-Centered Perspective on Algorithmic Personalization, is over fifty pages (and still in progress), so this blog post presents one narrow slice of the results as an illustration of the powerful insight the team unearthed.

People are curious, or perhaps concerned, about how exactly they are profiled as they travel around the web, and there is no way to find out definitively what traits are used to order, sort, and deliver the content they see. Rather than limit their survey to documented examples, the team described general situations where personalization might occur based on a variety of personal traits that had been either provided by the user or inferred from their browsing history. For example, survey respondents were asked to rate the fairness of situations like, “You are using a search engine. Your search results are filtered based on your gender, which was inferred from the webpages you visit and is accurate.” (There were over 50 scenarios like this one, presented at random; not all respondents were shown every scenario.)

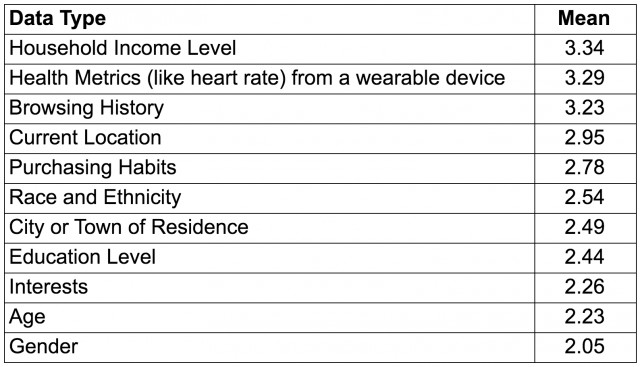

They were also asked to rate the sensitivity of different data types overall—specifically, to “indicate how sensitive you consider that information to be (even if some people and organizations already have access to it),” absent any context for how the information was collected or how it was being used.

Data Type Sensitivity Ratings

Scale: Not at all Sensitive (1), Not Too Sensitive (2), Somewhat Sensitive (3), Very Sensitive (4)

Is Personalization Based on Gender Perceived to be Fair?

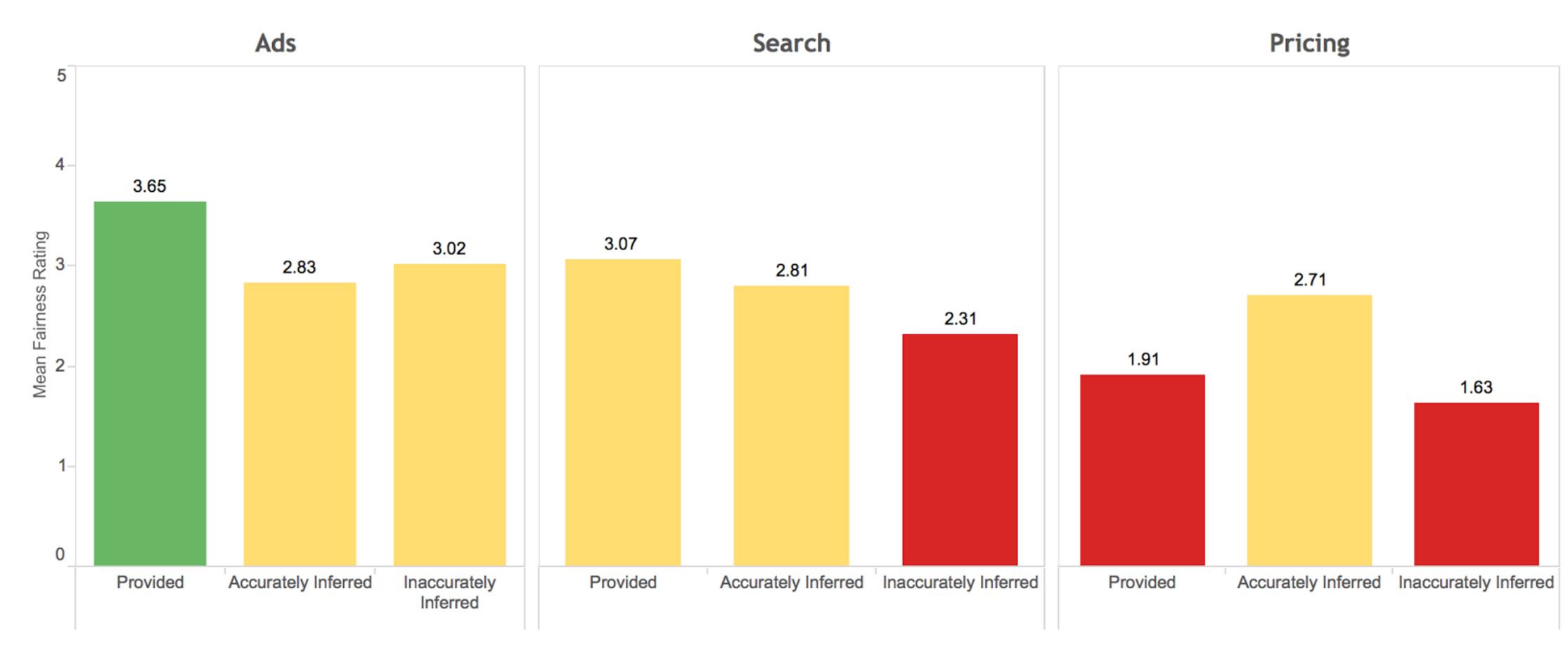

The responses to personalization based on gender varied depending on the context, making it a good example of the nuance revealed by this study.

Mean Fairness Ratings in Ads, Search, and Pricing Based on Gender Scenarios

Fairness Scale: Unfair (1); Somewhat Unfair (2); Neither Unfair nor Fair (3); Somewhat Fair (4); Fair (5)

As you can see, respondents were somewhat ambivalent about gender-based advertising, depending on how the information was obtained, but they felt it was significantly less fair to tailor search results and pricing based on a user’s gender.
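For readers curious about how a mean fairness rating is computed from individual answers on the 1-5 scale, here is a minimal sketch. It is not the research team's analysis code: the function name `mean_fairness_by_context` and the `toy_responses` values are hypothetical and invented purely for illustration, and they are not data from the survey.

```python
from collections import defaultdict
from statistics import mean

# Labels taken from the fairness scale described in this post.
FAIRNESS_SCALE = {
    1: "Unfair",
    2: "Somewhat Unfair",
    3: "Neither Unfair nor Fair",
    4: "Somewhat Fair",
    5: "Fair",
}


def mean_fairness_by_context(responses):
    """Group (context, rating) pairs by context and average each group."""
    by_context = defaultdict(list)
    for context, rating in responses:
        # Reject anything that is not a point on the 1-5 scale.
        if rating not in FAIRNESS_SCALE:
            raise ValueError(f"rating {rating!r} is not on the 1-5 scale")
        by_context[context].append(rating)
    return {context: mean(ratings) for context, ratings in by_context.items()}


# Placeholder responses, invented for illustration only (NOT survey data).
toy_responses = [
    ("advertising", 3), ("advertising", 4), ("advertising", 2),
    ("search", 2), ("search", 3),
    ("pricing", 1), ("pricing", 2),
]

means = mean_fairness_by_context(toy_responses)
for context, value in means.items():
    print(f"{context}: {value:.2f}")
```

Because each respondent saw only a random subset of the scenarios, the number of ratings behind each mean can differ, which is why the sketch averages whatever ratings exist for each context rather than assuming equal group sizes.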

One important trend in the results was that the perception of unfairness was higher when the context of the personalization was more consequential — meaning that the degree of perceived unfairness depends in part on the stakes of the decision. Although personalized advertising was perceived to be slightly unfair, it was far less controversial than pricing, with search falling somewhere in the middle.

This distribution may reflect that, while personalized advertising might result in the (relatively minor) offense of serving ads contrary to cultural values, search and price both mediate access to information or products. Thus personalization within these latter contexts has more substantial consequences. For companies considering how to internalize these results, the lesson is that the higher the stakes of a decision, the more likely personalization is to make users feel they are being treated unfairly. Because of this, personalization in high-stakes domains should be treated carefully and, in particular, should not be based on the most sensitive traits.

The perceptions of personalization based on gender are particularly interesting against the backdrop of the low sensitivity rating given to gender in the overall “Data Type Sensitivity Ratings.” This perhaps suggests that, while individuals don’t consider their gender sensitive per se, they are concerned with the implications of personalizing some content based on this factor. In particular, personalization based on gender within search and pricing was perceived as unfair. One respondent observed about search results, “I don’t think my gender provides enough information to improve results, and I worry that the filtering will be based on gender stereotypes or lead to people of different genders having access to different information.” That would clearly be a bad outcome.

These Results Should Inform Future Design Choices

Automated personalization will continue to influence the content we see online and how services are tailored for each individual, and will likely spread to many new venues in the coming years.

Advertising based on gender is widespread across the internet. Reducing the gendered nature of advertising may seem like an insurmountable task, but companies would be well-served to take these results seriously. The economic foundation of the commercial internet is a derivative of the long-standing relationship between advertisers and media outlets: marketers pay to gain the attention of a website’s visitors. While users may be comfortable with being exposed to ads in exchange for content, they still want to feel that they are being treated with respect by the first party and that the advertisements reflect cultural norms.

The decisions behind the personalization process already have a tremendous impact on how we view the world around us, and can influence what opportunities we are afforded. Ensuring that personalization is based on factors that are fair and equitable is important for both businesses and society. The technical and human processes powering online personalization currently have no mechanism to request, process, and act on feedback from individuals about their perceptions. The results shared here are only a small sliver of the total data the survey team collected, and the full extent of the results could have tremendous implications for future design choices. Stay tuned for the full paper later this summer!